Chapter 3 provided an in‐depth discussion of Azure Databricks, including access tokens. A token is a string value similar to the following, which, when used as part of an operation, validates that the operator is allowed to perform the task:

dapi570e6dffb9ee6b0966a4300c32a5c140-2

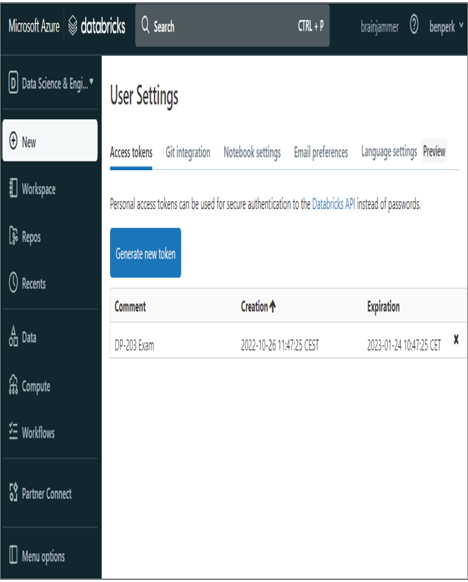

Personal access tokens are generated in the Azure Databricks workspace on the User Settings page, as shown in Figure 8.55. Clicking the Generate New Token button opens a pop‐up window asking for a Comment and a Lifetime (Days) value.

FIGURE 8.55 Generating an access token

The Comment is a description of what the token is used for, and the Lifetime (Days) value is how long the token will be valid. After that period has elapsed, the token no longer authenticates successfully. To use the token, you normally pass it with a REST API request, typically as a bearer token in the Authorization header. From a command console using curl, you can get a list of the clusters in your Azure Databricks workspace as follows:
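A request of this kind is an HTTP GET that carries the token in the Authorization header. As an illustration of the same call in Python (a minimal sketch, not the curl command itself), the following assumes the Clusters API endpoint `/api/2.0/clusters/list` and a placeholder workspace URL; the helper names `build_list_clusters_request` and `list_clusters` are hypothetical.

```python
import json
import urllib.request


def build_list_clusters_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for the cluster list endpoint."""
    # Assumed endpoint path for the Clusters API.
    url = workspace_url.rstrip("/") + "/api/2.0/clusters/list"
    # The personal access token travels as a bearer token in the Authorization header.
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def list_clusters(workspace_url: str, token: str) -> list:
    """Send the request and return the "clusters" array from the JSON response."""
    request = build_list_clusters_request(workspace_url, token)
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # An empty workspace may omit the array, so default to an empty list.
    return body.get("clusters", [])
```

Calling `list_clusters` with your own workspace URL and token performs the network request; an expired or revoked token would be expected to fail with an HTTP error rather than return data.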

This information is helpful for getting an overview of the state of all the clusters for the given workspace. The type of cluster, the state, and the person who provisioned it are all included in the result from the REST API.

Load a DataFrame with Sensitive Information

When data is loaded from a file or table that contains sensitive data, you should mask that data prior to rendering it to the console. The following code places a mask on a column that contains an email address. The output of the SUBJECTS.parquet file, located in the Chapter08 directory on GitHub, follows the code snippet.
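As a minimal sketch of the masking idea, the following plain Python function hides most of the local part of an email address before the value is displayed. It is an illustration under stated assumptions, not the code from mask.ipynb: the function name `mask_email`, the `EMAIL` column name, and the sample rows are all hypothetical stand-ins for data loaded into a DataFrame.

```python
from typing import Optional


def mask_email(email: Optional[str], visible: int = 1, mask_char: str = "*") -> Optional[str]:
    """Hide all but the first `visible` characters of the local part of an address."""
    if email is None:
        return None
    local, at, domain = email.partition("@")
    if not at:
        # Not a well-formed address: mask everything rather than risk leaking it.
        return mask_char * len(email)
    shown = local[:visible]
    return shown + mask_char * (len(local) - len(shown)) + at + domain


# Hypothetical rows standing in for records loaded from a file or table.
rows = [
    {"ID": 1, "EMAIL": "alice@example.com"},
    {"ID": 2, "EMAIL": "bob@example.org"},
]

# Build masked copies for display; the original rows are left unchanged.
masked_rows = [{**row, "EMAIL": mask_email(row["EMAIL"])} for row in rows]
for row in masked_rows:
    print(row)
```

In a Spark notebook, the same logic could be applied to a DataFrame column, for example by wrapping the function in a user-defined function or by using a regular expression replacement on the column.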

Masking data like government ID numbers, address details, phone numbers, and email addresses should be standard practice. You should no longer render any of these PII details to a console or application by default. Sensitivity labels, as you learned previously, should be applied in all cases and used as the basis for determining permissions to view unmasked data. A file named mask.ipynb containing the code snippet is in the Chapter08 directory on GitHub.

Write Encrypted Data to Tables or Parquet Files

The previous section discussed masking data that is loaded in a DataFrame and then rendered to the console. Combining that masking technique with the encryption feature described here provides a high level of data protection. You could store only the encrypted values of all PII data residing in your tables or data files. Then, when the data is retrieved and the permissions justify decryption, you can decrypt the data and apply the mask, again based on the permissions granted to the client retrieving the data. Numerous libraries and encryption algorithms can be used to encrypt data. One of the more popular options for use with Python is Fernet, a symmetric encryption recipe in the cryptography library (https://cryptography.io/en/latest/fernet). The following code is an example of how to encrypt and decrypt a column contained within a Parquet file:
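The sketch below illustrates the encrypt and decrypt round trip with Fernet. It is an illustrative sketch, not the code from the book's notebook: it works on plain Python rows rather than a DataFrame, and the sample rows, their values, and the helper names `encrypt_value` and `decrypt_value` are hypothetical. It assumes the `cryptography` package is installed.

```python
from cryptography.fernet import Fernet

# Generate a key; anyone holding it can decrypt, so treat it as a secret.
key = Fernet.generate_key()
fernet = Fernet(key)

# Hypothetical rows standing in for the BIRTHDATE column of a loaded file.
rows = [
    {"ID": 1, "BIRTHDATE": "1980-05-17"},
    {"ID": 2, "BIRTHDATE": "1992-11-03"},
]


def encrypt_value(value: str) -> str:
    """Encrypt a string and return the Fernet token as text."""
    return fernet.encrypt(value.encode("utf-8")).decode("ascii")


def decrypt_value(token: str) -> str:
    """Decrypt a Fernet token back to the original string."""
    return fernet.decrypt(token.encode("ascii")).decode("utf-8")


# Encrypt the column, then decrypt it again with the same key.
encrypted_rows = [{**r, "BIRTHDATE": encrypt_value(r["BIRTHDATE"])} for r in rows]
decrypted_rows = [{**r, "BIRTHDATE": decrypt_value(r["BIRTHDATE"])} for r in encrypted_rows]
```

Fernet tokens include a timestamp and a random initialization vector, so encrypting the same value twice produces different tokens; the encrypted column therefore cannot be used for equality joins or lookups.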

The SUBJECTS.parquet file is located in the Chapter08 directory on GitHub, as is a file named encryptDecrypt.ipynb, which includes the preceding code snippet. The first portion of the code imports the Fernet library and generates an encryption key. The encryption key resembles the following and is used for encrypting and decrypting the BIRTHDATE column: MHfAzNysASlvESYfPumo4JiJmBRZm5Dpj2g8ovGGdMg

An important point about the initial portion of the code is that the key is generated on each invocation. If you encrypt the data and do not store the key, then you cannot decrypt it. If you want to encrypt the data prior to rendering it to the output, without the intention of storing the encrypted values, then there is no need to store the key. However, if you do plan to store the data in a table using the saveAsTable() method or in a Parquet file, then you need to securely store the key and retrieve it when you want to decrypt the data. This is an example of a scenario where Azure Key Vault can be used to store the encryption key for later use.
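The consequence of losing the key can be shown in a few lines. The following is a minimal sketch that assumes the `cryptography` package is installed; the in-memory dictionary `secret_store` is only a stand-in for a real secret store such as Azure Key Vault, and the helper `get_or_create_key` and the secret name `birthdate-key` are hypothetical.

```python
from cryptography.fernet import Fernet, InvalidToken

# Stand-in for a secret store such as Azure Key Vault (an in-memory dict here).
secret_store = {}


def get_or_create_key(name: str) -> bytes:
    """Return the stored key for `name`, generating and saving one if absent."""
    if name not in secret_store:
        secret_store[name] = Fernet.generate_key()
    return secret_store[name]


# Encrypt with a key that is stored under a name for later retrieval.
token = Fernet(get_or_create_key("birthdate-key")).encrypt(b"1980-05-17")

# A later run that retrieves the stored key can decrypt the value.
recovered = Fernet(get_or_create_key("birthdate-key")).decrypt(token)

# A later run that generates a fresh key instead cannot decrypt it.
try:
    Fernet(Fernet.generate_key()).decrypt(token)
    fresh_key_worked = True
except InvalidToken:
    fresh_key_worked = False
```

In a real workspace, the dictionary lookup would be replaced by a call that reads the key from the secret store, so that the key never appears in the notebook or alongside the encrypted data.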